Definition¶

An AI challenge is a specification of a problem that should be solved using AI applications. It defines the functional and non-functional specifications as well as the input/output interfaces.

Create an AI Challenge¶

The steps to create an AI challenge that provides specifications, interfaces and description are the following:

Create a Gitlab repo for the challenge¶

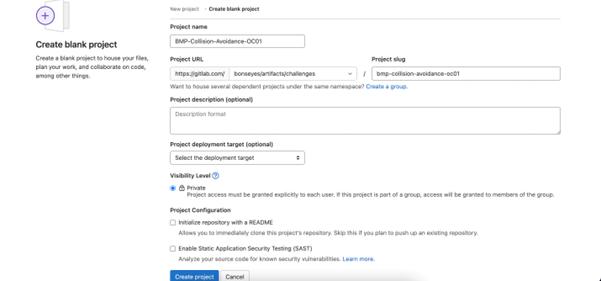

Each challenge needs to have its own repository in GitLab in https://gitlab.com/bonseyes/artifacts/challenges. See below an example for the repository creation:

LPDNN full stack¶

Naming Convention for Challenge Repository: The challenge repository name should follow a naming convention which is:

OrganizationAcronym-ChallengeName-ChallengeAcronym

where:

OrganizationAcronym: Organization to which the challenge belongs. For all BonsApps challenges, this acronym will be “BMP”

ChallengeName: Name of the challenge. If the challenge name has multiple words each word should start with a capital letter with a hyphen (“-”) in between the words.

ChallengeAcronym: This last part distinguishes challenges that belong to the same organization and have the same name (multiple challenges with the same name). In such cases this acronym serves as the challenge ID, e.g., OC1, OC2.
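The naming convention above can be sketched as a small helper. This is only an illustration: the function name `build_repo_name` and the sample challenge name "face detection" are hypothetical and not part of the Bonseyes tooling.

```python
import re


def build_repo_name(organization: str, challenge_name: str, challenge_acronym: str) -> str:
    """Join the three parts as OrganizationAcronym-ChallengeName-ChallengeAcronym."""
    # Capitalize the first letter of every word and join the words with hyphens.
    words = re.split(r"[\s\-_]+", challenge_name.strip())
    name = "-".join(word[:1].upper() + word[1:] for word in words if word)
    return f"{organization}-{name}-{challenge_acronym}"


# Hypothetical challenge name, used only to show the resulting format.
print(build_repo_name("BMP", "face detection", "OC1"))  # BMP-Face-Detection-OC1
```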

Add the challenge definition pdf inside the /docs directory¶

Each challenge is defined in a presentation/PDF that contains all the details about the challenge, including the input and output definitions. This presentation/PDF must be added to the GitLab repository inside the “/docs” directory. Contact the Bonseyes team to obtain an AI Challenge presentation template.

Define the input and result JSON schemas based on the challenge definition¶

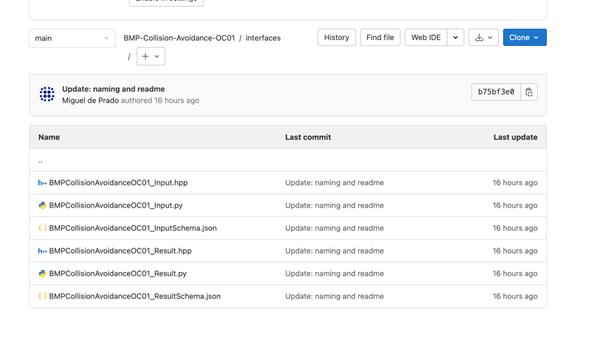

Based on the input and result specs defined in the challenge definition document (PDF), the challenge provider needs to create the input and result JSON schemas and add them to the “/interfaces” directory of the challenge repository. To know more on how to create JSON schemas, please refer to the link.
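As a rough illustration of what a result schema might contain, the sketch below builds a small JSON Schema as a Python dictionary and serializes it. The title `MyChallengeResult` and the fields `label` and `confidence` are assumptions made for this example; the real fields come from the challenge definition document. The helper only checks required top-level keys, so it is not a substitute for a full JSON Schema validator.

```python
import json

# Hypothetical result schema for a classification-style challenge.
# The field names ("label", "confidence") are illustrative only.
result_schema = {
    "$schema": "http://json-schema.org/draft-07/schema#",
    "title": "MyChallengeResult",
    "type": "object",
    "properties": {
        "label": {"type": "string"},
        "confidence": {"type": "number", "minimum": 0, "maximum": 1},
    },
    "required": ["label", "confidence"],
    "additionalProperties": False,
}


def missing_required(schema: dict, instance: dict) -> list:
    """Return the required top-level keys that the instance does not provide."""
    return [key for key in schema.get("required", []) if key not in instance]


# Serialized form, as it could be stored under /interfaces.
schema_text = json.dumps(result_schema, indent=2)

example = {"label": "cat", "confidence": 0.93}
print(missing_required(result_schema, example))  # []
```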

Naming convention for the files inside /interfaces: All the files inside the /interfaces directory should follow a naming convention which is:

Input Schema: FullChallengeNameinPascalCase_InputSchema.json

Result Schema: FullChallengeNameinPascalCase_ResultSchema.json

Input Python Interface: FullChallengeNameinPascalCase_Input.py

Input C++ Interface: FullChallengeNameinPascalCase_Input.hpp

Result Python Interface: FullChallengeNameinPascalCase_Result.py

Result C++ Interface: FullChallengeNameinPascalCase_Result.hpp

Figure below shows the contents of the /interfaces directory. An example of the files inside the /interfaces can be referred from the challenge example.

/interfaces directory containing the schemas and interfaces for python and C++¶
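The file naming convention for /interfaces can be summarized with a short helper. The function name `interface_file_names` and the sample challenge name `FaceDetection` are hypothetical; only the suffixes are taken from the convention listed above.

```python
def interface_file_names(challenge_name_pascal: str) -> dict:
    """Map each artifact in /interfaces to its expected file name."""
    base = challenge_name_pascal
    return {
        "input_schema": f"{base}_InputSchema.json",
        "result_schema": f"{base}_ResultSchema.json",
        "input_python": f"{base}_Input.py",
        "input_cpp": f"{base}_Input.hpp",
        "result_python": f"{base}_Result.py",
        "result_cpp": f"{base}_Result.hpp",
    }


# "FaceDetection" is a made-up challenge name in PascalCase.
for kind, file_name in interface_file_names("FaceDetection").items():
    print(f"{kind}: {file_name}")
```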

Generate the python and C++ interface files from the JSON schemas¶

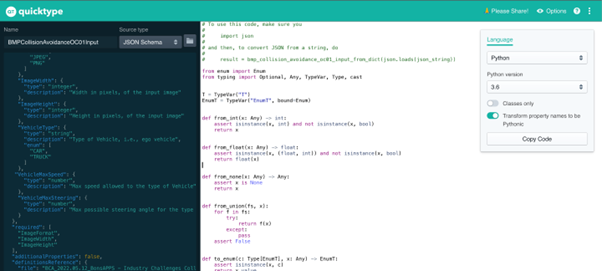

Once the JSON schemas for input and result are created, the corresponding Python and C++ interface files can be generated using the QuickType Tool.

Generating python interface file¶

To generate the python interface file, follow the steps below:

Select the Source Type to “JSON Schema” in the left menu of quick type UI.

Put the Name to FullChallengeNameinPascalCase+[Input/Result] in the left menu of quick type UI and copy the JSON schema contents.

Select the language as python and python version as 3.6 in the right menu of the quick type UI and only check the “Transform property names to be Pythonic” button.

This will generate the interface file contents in the middle section of the quick type UI which can be copied with the “Copy Code” button in the right menu. Figure below shows an example for the python interface file generation from the JSON schema.

Python interface generation from JSON Schema file¶

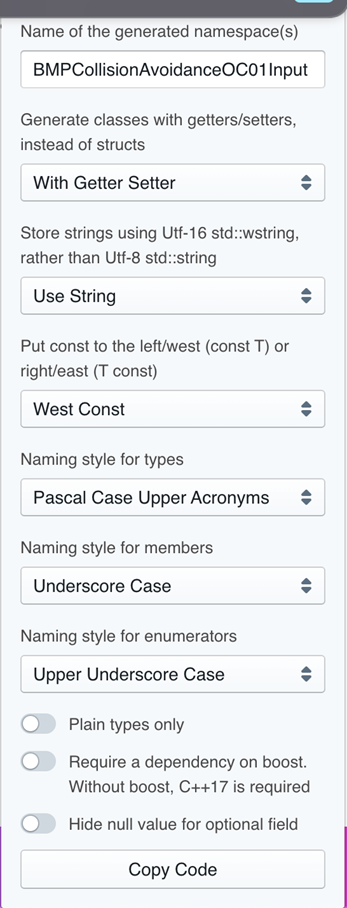
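To give an idea of how such an interface file is typically used, the sketch below is a hand-written approximation of a generated class with `from_dict` and `to_dict` helpers. It is not actual tool output: the exact code depends on the schema, the quicktype version, and the selected options, and the class name `MyChallengeResult` and its fields are assumptions made for this example.

```python
from typing import Any


class MyChallengeResult:
    """Hand-written stand-in for a generated result interface (hypothetical fields)."""

    def __init__(self, label: str, confidence: float) -> None:
        self.label = label
        self.confidence = confidence

    @staticmethod
    def from_dict(obj: Any) -> "MyChallengeResult":
        # Reject anything that is not a JSON object with the expected field types.
        assert isinstance(obj, dict)
        label = obj["label"]
        confidence = obj["confidence"]
        assert isinstance(label, str)
        assert isinstance(confidence, (int, float)) and not isinstance(confidence, bool)
        return MyChallengeResult(label, float(confidence))

    def to_dict(self) -> dict:
        return {"label": self.label, "confidence": self.confidence}


result = MyChallengeResult.from_dict({"label": "cat", "confidence": 0.93})
print(result.to_dict())  # {'label': 'cat', 'confidence': 0.93}
```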

Generating C++ interface file¶

To generate the C++ interface file, follow the steps below:

Select the Source Type to “JSON Schema” in the left menu of quick type UI.

Put the Name to FullChallengeNameinPascalCase+[Input/Result] in the left menu of quick type UI and copy the JSON schema contents.

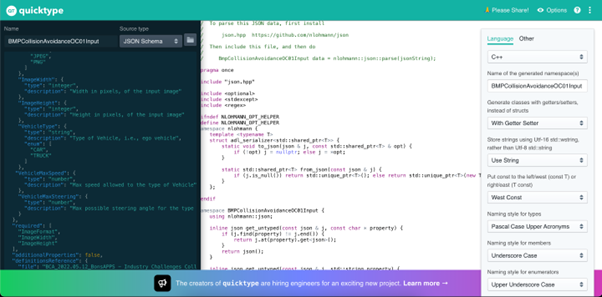

Select C++ as the language and set the name of the generated namespace(s) to the same name used in the left menu in the previous step. For the rest of the settings, use the settings shown in the figure below:

Setting for C++ interface generation from JSON schema¶

This will generate the interface file contents in the middle section of the quick type UI which can be copied with the “Copy Code” button in the right menu. Figure below shows an example for the C++ interface file generation from the JSON schema:

C++ interface generation from JSON schema¶

Note: To ensure that the generated C++ interface file is compatible with C++14 (used in LPDNN), a quick check can be made with g++ compiler to make sure there are no compilation issues. To do this, the JSON C++ header needs to be downloaded from JSON Header and saved in the interfaces directory as “json.hpp”.

Then the command to use is:

$ g++ -Wall -Werror -std=c++14 -I <path to interfaces DIR> <PATH to Interface file>

Create the input and result example JSON files¶

Based on the input and result JSON schemas, example input and result JSON files need to be created to show what the input and result should look like in JSON format. These files should be uploaded to the “/examples” directory of the challenge repository.

Create and add the README.md to the challenge repository root¶

The challenge provider needs to add a README file to the challenge repository root. It should cover a short description, the news, and links to suitable datasets/datatools and to the eval tool, if available (point to the datasets/datatools created by the AI Talents). For an example, see Challenge README Example.

Creating the LPDNN challenge¶

This guide assumes you have already done the following:

Set up the local environment as explained in Prerequisites

The workflow of creating a LPDNN AI Challenge is as follows:

Creating the LPDNN challenge metadata

Defining the LPDNN challenge interface

The following steps can be skipped for the moment:

Defining the challenge training and sample data (Deprecated),

Defining the evaluation procedure (Deprecated)

A template of the LPDNN challenge YML file is available in the AI Asset Container Generator.

The challenge artifact is stored in a file named challenge.yml that contains all the information on the challenge.

The schema of challenge.yml is described below.

Schema for Bonseyes Challenges¶

Type: object (additionalProperties: False). Properties:

- metadata
- specification (object, additionalProperties: False): Challenge specification. A challenge specification is built from three objects. interface is dynamically validated against an external schema specifying the required parameters for this challenge. training_data and sample_data are required by all challenges.
  - interface (object, additionalProperties: False): Defines a type and references an external schema that has to be dynamically validated. The contents are then inside the value object, which is generic and needs to be filled according to the referenced schema. See types/image-classification.yml for an example of such an external schema. Automated dynamic loading and validation of unknown external schemas is not supported by json-schema at this point.
    - id (string): ID that uniquely identifies the AI app interface.
    - parameters (object)
  - training_data (data.yml): Dataset used to train the AI application.
  - sample_data (data.yml): Small dataset that can be used to test the AI app.
  - Dataset that can be used to quantize the model after training (data.yml).
- Deployment specification (object, additionalProperties: False).
  - ID of the platform (string).
  - (object)
- Path to a pre-trained model (string).
- evaluation_protocol (object): Describes the evaluation procedure to execute and the criteria to consider the evaluation procedure successful. Each additional property is an object (additionalProperties: False) with the following properties:
  - tool (object, additionalProperties: False)
    - id (string): ID of the evaluation tool to use for the evaluation.
    - parameters (object): Parameters for the evaluation tool.
  - data (object, additionalProperties: data.yml)
  - (data.yml)
  - constraints (array of objects, each with additionalProperties: False)
    - metric (string): Name of the metric on which the constraint applies (as generated by the evaluation protocol).
    - condition (string, one of smaller_than, larger_than, equal_to): The condition to be satisfied.
    - value (number): Value of the metric.

A minimal challenge configuration looks as follows:

    metadata:
      title: <name> Challenge
      description: |
        Description
    specification:
      interface:
        id: com_bonseyes/interfaces#<challenge_id>
        parameters:
          classes: classes.txt

Creating the LPDNN challenge metadata¶

Once you have created a challenge.yml based on the template, you can start implementing the challenge itself. The first part of the challenge that needs to be completed is the metadata. The minimum metadata required for a challenge is the following:

    metadata:
      title: Title of the Challenge
      description: |
        Description of the challenge

The description of the challenge can be a multiline string, just make sure the indentation is consistent. It is recommended to use a YAML editor (for instance the one included in PyCharm) to make sure you don’t make syntax mistakes.
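One way to keep the indentation of a multiline description consistent is to generate the metadata section programmatically. The sketch below uses only the standard library; the helper name `render_metadata` is hypothetical, and the code assumes the title contains no characters that would need YAML quoting (such as a colon followed by a space).

```python
import textwrap


def render_metadata(title: str, description: str) -> str:
    """Render the metadata section with a consistently indented block scalar."""
    # Every description line gets the same four-space indentation under "description: |".
    body = textwrap.indent(textwrap.dedent(description).strip("\n"), "    ")
    return f"metadata:\n  title: {title}\n  description: |\n{body}\n"


print(render_metadata("My Sample Challenge", "First line.\nSecond line."))
```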

Defining the LPDNN challenge interface¶

Once the metadata for the challenge is defined you need to define the interface that is expected to be implemented by the resulting AI App. The interface of an AI app defines the inputs and outputs.

The Bonseyes eco-system provides a number of pre-defined parametrized interfaces that are supported by the deployment tool and address common use cases. These interfaces can be used without having to develop any custom embedded code. Other interfaces can be defined if necessary, but they require writing a corresponding extension for the deployment tool.

An interface artifact is defined in a file interface.yml and available in the deployment packages under ${deploymentPackage}/tools/ai_app_generator/share/interfaces. The schema of this file is described below:

Bonseyes Interface Description¶

Type: object. Properties:

- Deployment metadata
- Path to schema for the AI Class parameters in the challenge (string)
- Path to schema for the JSON serialization of the results (string)
- Path to schema for the JSON serialization of the ground truth used to train this class (string)
- Path to Swagger file of the HTTP API for the AI class (string)

The challenge defines the interface of the AI app by adding the following section:

    specification:
      interface:
        id: name of the interface
        parameters: parameters of the interface

Default Interfaces¶

The following interfaces are distributed by default with Bonseyes:

Image Classification (id: com_bonseyes/interfaces#image_classification): given an image, provide the probability that the image belongs to one of a set of classes. Find more information here: Image Classification.

Object Detection (id: com_bonseyes/interfaces#object_detection): given an image, find the bounding boxes of a set of classes of objects and, optionally, provide the position of the landmarks of each object. Find more information here: Object Detection.

Face Recognition (id: com_bonseyes/interfaces#face_recognition): given an image of a face, create a fingerprint that can be used to identify the face. Find more information here: Face Recognition.

Image Segmentation (id: com_bonseyes/interfaces#image_segmentation): given an image, compute a segmentation mask and the confidence of the detected object classes. Find more information here: Image Segmentation.

Audio Classification (id: com_bonseyes/interfaces#audio_classification): given an audio snippet, provide the probability that the snippet belongs to one of a set of classes of audio snippets (e.g. keywords). Find more information here: Audio Classification.

Signal Classification (id: com_bonseyes/interfaces#signal_classification): given a signal stream, compute the confidence of each possible signal class. Find more information here: Signal Classification.

Depending on the type of AI-app that is selected different parameters need to be provided in the challenge file. In the next subsections we describe the details for each type.

Image classification¶

An image classification challenge aims at providing the probability that the input image belongs to one of a set of classes.

A minimal challenge.yml file for an image classification challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#image_classification
        parameters:
          classes: classes.txt

The specification.interface.parameters.classes parameter must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a class. The text file is expected to be encoded in UTF-8 and use UNIX line endings.
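The encoding and line-ending requirements for the classes file can be enforced when the file is written and checked when it is read. The sketch below is one possible way to do this with the standard library; the helper names `write_labels` and `read_labels` and the sample labels are hypothetical.

```python
import tempfile
from pathlib import Path


def write_labels(path: Path, labels: list) -> None:
    """Write one label per line, UTF-8 encoded, with UNIX line endings."""
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        for label in labels:
            handle.write(f"{label}\n")


def read_labels(path: Path) -> list:
    """Read the labels back, rejecting files that contain carriage returns."""
    raw = Path(path).read_bytes()
    if b"\r" in raw:
        raise ValueError(f"{path} must use UNIX line endings")
    return raw.decode("utf-8").splitlines()


with tempfile.TemporaryDirectory() as tmp:
    classes_file = Path(tmp) / "classes.txt"
    write_labels(classes_file, ["cat", "dog"])
    print(read_labels(classes_file))  # ['cat', 'dog']
```

The position of a label in the file (starting from the first line) is what other files would refer to as the class index, so the order of the labels should be kept stable.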

Object detection¶

An object detection challenge aims at finding the bounding boxes of a set of classes of objects, and optionally for each object, provide the position of landmarks of the object.

A minimal challenge.yml file for an object detection challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#object_detection
        parameters:
          classes: classes.txt
          landmarks: landmarks.txt

The specification.interface.parameters.classes parameter must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a class. The text file is expected to be encoded in UTF-8 and use UNIX line endings.

The specification.interface.parameters.landmarks parameter is optional and, if present, must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a landmark. The text file is expected to be encoded in UTF-8 and use UNIX line endings.
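Because both paths are relative to the challenge.yml file, a consumer of the challenge has to resolve them against the directory that contains it. A minimal sketch, assuming the parameters have already been loaded into a dictionary (the helper name `resolve_parameter_files` and the sample directory are hypothetical):

```python
from pathlib import Path


def resolve_parameter_files(challenge_file: str, parameters: dict) -> dict:
    """Resolve 'classes' (required) and 'landmarks' (optional) relative to challenge.yml."""
    base_dir = Path(challenge_file).parent
    if "classes" not in parameters:
        raise KeyError("object detection requires the 'classes' parameter")
    resolved = {"classes": base_dir / parameters["classes"]}
    # 'landmarks' may be omitted; include it only when the challenge provides it.
    if "landmarks" in parameters:
        resolved["landmarks"] = base_dir / parameters["landmarks"]
    return resolved


paths = resolve_parameter_files(
    "challenges/demo/challenge.yml",
    {"classes": "classes.txt", "landmarks": "landmarks.txt"},
)
print(paths["classes"].as_posix())    # challenges/demo/classes.txt
print(paths["landmarks"].as_posix())  # challenges/demo/landmarks.txt
```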

Face recognition¶

A face recognition challenge aims at creating a fingerprint that can be used to identify the face given an input image.

A minimal challenge.yml file for a face recognition challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#face_recognition

Image segmentation¶

An image segmentation challenge aims at identifying a mask to segment each pixel of an input image based on the class they belong to.

A minimal challenge.yml file for an image segmentation challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#image_segmentation
        parameters:
          classes: classes.txt

The specification.interface.parameters.classes parameter must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a class. The text file is expected to be encoded in UTF-8 and use UNIX line endings.

Audio classification¶

An audio classification challenge aims at providing the probability that the audio snippet belongs to one of a set of classes.

A minimal challenge.yml file for an audio classification challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#audio_classification
        parameters:
          classes: classes.txt

The specification.interface.parameters.classes parameter must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a class. The text file is expected to be encoded in UTF-8 and use UNIX line endings.

Signal classification¶

A signal classification challenge aims at providing the probability that the signal stream belongs to one of a set of classes.

A minimal challenge.yml file for a signal classification challenge looks as follows:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#signal_classification
        parameters:
          classes: classes.txt

The specification.interface.parameters.classes parameter must name a path to a text file (relative to the position of the challenge.yml file) that contains on each line a label for a class. The text file is expected to be encoded in UTF-8 and use UNIX line endings.

Defining the challenge training and sample data (Deprecated)¶

In its simplest form, training data can be attached to the challenge by adding the following sections to the challenge:

    specification:
      interface: ...
      training_data:
        sources:
          - title: title of the first dataset
            url: url of the dataset
            description: |
              Multiline description of the first dataset
          - title: title of another dataset
            url: url of the other dataset
            description: |
              Multiline description of the other dataset
      sample_data:
        sources:
          - title: title of the dataset
            url: url of the dataset
            description: |
              Multiline description of the dataset

Both training and sample data may have multiple sources. If the data is distributed with the challenge (that is, in the same package), you can put a path relative to the challenge.yml file as the url of the dataset. Data described in this form needs to be manually downloaded by the challenge user.

For each data item it is possible to include data tools that allow the challenge user to automatically download and transform the data. Data tools can be created using the process described in /pages/dev_guides/data_tool/create_data_tool.

The data tools are referenced as follows:

    sample_data:
      tool:
        id: com_example/mydatatool#data_tool.yml
        parameters: optional parameters
        input_data: optional path to a directory with input data for the data tool
      sources:
        - title: title of the dataset
          url: url of the dataset
          description: |
            Multiline description of the dataset

The id property of the data tool is formed by the name of the package containing the data tool metadata and the path to the file name. The parameters property is optional and is an object compliant with the parameters schema provided by the data tool. The input_data property is needed only for data tools that require it in their metadata; it points to a directory containing the input data for the data tool.
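Judging from the example id `com_example/mydatatool#data_tool.yml`, the package name and the file path appear to be separated by a `#` character. Under that assumption, an id can be split as sketched below; the helper name `split_artifact_id` is hypothetical.

```python
def split_artifact_id(artifact_id: str) -> tuple:
    """Split 'package#path' into (package, path)."""
    # Assumption: the first '#' separates the package name from the file path.
    package, separator, path = artifact_id.partition("#")
    if not separator or not package or not path:
        raise ValueError(f"expected '<package>#<path>', got {artifact_id!r}")
    return package, path


print(split_artifact_id("com_example/mydatatool#data_tool.yml"))
# ('com_example/mydatatool', 'data_tool.yml')
```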

Defining the evaluation procedure (Deprecated)¶

The last part of the challenge that can be defined is the evaluation procedure. This section defines the evaluation tool used to check the performance of the AI app deployed on the target hardware. The evaluation tool can generate a number of metrics, and this section lets you specify constraints on these metrics that the AI apps must meet.

Most of the time the evaluation procedure requires some data, the so-called evaluation data. This section defines the evaluation data as well. Since the evaluation tool expects data in a pre-defined format, the section can also define a data tool that transforms the evaluation data into this target format, which makes it possible to support arbitrary input data formats. For more information about how to specify data, see the section Defining the challenge training and sample data (Deprecated); the same syntax used for training and sample data applies to the evaluation data.

The general format of the evaluation section of the challenge is the following:

    evaluation_protocol:
      name_of_procedure:
        tool:
          id: id of the evaluation tool
          parameters: optional parameters of the evaluation tool
        data:
          name_of_the_data:
            tool:
              id: id of the data tool
              parameters: optional parameters of the data tool
              input_data: optional directory containing input data for the data tool
            sources: # optional description of the dataset
              - title: title of the evaluation dataset
                url: original url of the dataset
                description: |
                  Multiline description of the dataset
        constraints:
          - metric: accuracy
            condition: larger_than
            value: 0.99
          - metric: memory
            condition: smaller_than
            value: 1000
          - metric: latency
            condition: smaller_than
            value: 100

The evaluation_protocol.{name_of_procedure}.data.{name_of_the_data}.sources section is optional and has the same structure as the corresponding sections in the training and sample data. The name_of_procedure property can be chosen by the challenge writer, and multiple evaluation procedures can be defined in the same challenge. The section evaluation_protocol.{name_of_procedure}.data.{name_of_the_data}.tool can be replaced with a single property evaluation_protocol.{name_of_procedure}.data.{name_of_the_data}.dataset that points to a directory containing the data in an already standardized format.
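To illustrate how a list of constraints could be checked against the metrics produced by an evaluation, here is a minimal sketch. It is not the Bonseyes implementation: the function name `failed_constraints`, the strict comparisons for `smaller_than` and `larger_than`, the tolerance-based comparison for `equal_to`, and the measured values are all assumptions made for this example.

```python
import math
import operator

# Conditions listed in the challenge schema, mapped to comparison functions.
CONDITIONS = {
    "smaller_than": operator.lt,
    "larger_than": operator.gt,
    # Assumption: floating-point metrics are compared with a small tolerance.
    "equal_to": lambda measured, target: math.isclose(measured, target),
}


def failed_constraints(metrics: dict, constraints: list) -> list:
    """Return the constraints that the measured metrics do not satisfy."""
    failures = []
    for constraint in constraints:
        measured = metrics.get(constraint["metric"])
        compare = CONDITIONS[constraint["condition"]]
        # A metric that was not measured cannot satisfy its constraint.
        if measured is None or not compare(measured, constraint["value"]):
            failures.append(constraint)
    return failures


constraints = [
    {"metric": "accuracy", "condition": "larger_than", "value": 0.99},
    {"metric": "memory", "condition": "smaller_than", "value": 1000},
    {"metric": "latency", "condition": "smaller_than", "value": 100},
]
measured = {"accuracy": 0.995, "memory": 1200, "latency": 80}
print([c["metric"] for c in failed_constraints(measured, constraints)])  # ['memory']
```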

The Bonseyes platform provides some pre-defined evaluation tools for typical evaluations. The following evaluation tools are available:

Image Classification Evaluation Tool (id: com_bonseyes/challenge_tools#evaluation/image_classification): measures accuracy and latency of an Image Classification AI app using a benchmark dataset.

Object Detection Evaluation Tool (id: com_bonseyes/challenge_tools#evaluation/object_detection): measures mAP and latency of an Object Detection AI app using a benchmark dataset.

The details of the tools are described in the next paragraphs. Custom evaluation tools can be defined following the instructions in /pages/dev_guides/eval_tool/create_evaluation_tool

The image classification evaluation tool measures the accuracy and latency of an image classification AI app.

The minimal challenge file to use this evaluation tool is the following:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#image_classification
        parameters:
          classes: classes.txt
    evaluation_protocol:
      evaluation:
        tool:
          id: com_bonseyes/challenge_tools#evaluation/image_classification
        data:
          evaluation_data:
            dataset: benchmark_data

The sections metadata and specification are explained above. The evaluation protocol section describes a single evaluation procedure called evaluation. It uses the image classification evaluation tool and the data present in the directory benchmark_data relative to the challenge.yml file.

The data in the directory must have the following format:

The directory must contain a file dataset.json with the following format:

    {
      "samples": [
        {
          "id": "id_of_image1",
          "class": "index of the class as defined in the classes.txt",
          "image": "path to the image relative to directory containing the dataset.json file"
        },
        {
          "id": "id_of_image2",
          ...
        }
      ]
    }

The directory must contain all the images to be tested in either PNG or JPEG format.
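A dataset.json file with this layout can be produced from a list of samples, as sketched below. The helper name `build_classification_dataset`, the sample ids, and the image paths are hypothetical. Storing the class index as an integer is an assumption, since the format description does not state the value type.

```python
import json


def build_classification_dataset(samples: list) -> dict:
    """Build the dataset.json structure from (id, class_index, image_path) tuples."""
    return {
        "samples": [
            {"id": sample_id, "class": class_index, "image": image_path}
            for sample_id, class_index, image_path in samples
        ]
    }


# Image paths are relative to the directory that contains dataset.json.
dataset = build_classification_dataset([
    ("img_0001", 0, "images/img_0001.png"),
    ("img_0002", 3, "images/img_0002.jpg"),
])
print(json.dumps(dataset, indent=2))
```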

The object detection evaluation tool measures the mAP and latency of an object detection AI app.

The minimal challenge file to use this evaluation tool is the following:

    metadata:
      title: My Sample Challenge
      description: |
        This challenge is a sample challenge
    specification:
      interface:
        id: com_bonseyes/interfaces#object_detection
        parameters:
          classes: classes.txt
    evaluation_protocol:
      evaluation:
        tool:
          id: com_bonseyes/challenge_tools#evaluation/object_detection
        data:
          evaluation_data:
            dataset: benchmark_data

The sections metadata and specification are explained above. The evaluation protocol section describes a single evaluation procedure called evaluation. It uses the object detection evaluation tool and the data present in the directory benchmark_data relative to the challenge.yml file.

The data in the directory must have the following format:

The directory must contain a file dataset.json with the following format:

    {
      "samples": [
        {
          "id": "id_of_image1",
          "image": "path to the image relative to directory containing the dataset.json file",
          "bb": [
            {
              "class_index": "index of the class of the first object as defined in classes.txt",
              "bounding_box": {
                "origin": {
                  "x": minimum x of bounding box,
                  "y": minimum y of bounding box
                },
                "size": {
                  "x": width of bounding box,
                  "y": height of bounding box
                }
              }
            },
            {
              "class_index": "index of the class of the second object as defined in classes.txt",
              ...
            }
          ]
        },
        {
          "id": "id_of_image2",
          ...
        }
      ]
    }

The directory must contain all the images to be tested in either PNG or JPEG format.
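Annotations are often available as corner coordinates rather than as an origin and a size. The sketch below shows one way to convert them into the layout described above; the helper names, the sample id, and the coordinates are hypothetical, and the integer class index is an assumption.

```python
def corners_to_box(x_min: float, y_min: float, x_max: float, y_max: float) -> dict:
    """Convert corner coordinates to the origin/size layout used in dataset.json."""
    return {
        "origin": {"x": x_min, "y": y_min},
        "size": {"x": x_max - x_min, "y": y_max - y_min},
    }


def detection_sample(sample_id: str, image_path: str, objects: list) -> dict:
    """Build one entry of 'samples' from (class_index, x_min, y_min, x_max, y_max) tuples."""
    return {
        "id": sample_id,
        "image": image_path,
        "bb": [
            {"class_index": class_index, "bounding_box": corners_to_box(*corners)}
            for class_index, *corners in objects
        ],
    }


sample = detection_sample("img_0001", "images/img_0001.png", [(2, 10, 20, 110, 70)])
print(sample["bb"][0]["bounding_box"])
# {'origin': {'x': 10, 'y': 20}, 'size': {'x': 100, 'y': 50}}
```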

Upload Challenge¶

THIS SECTION IS IN PROGRESS

This guide assumes you have already done the following:

Set up the local environment as explained in Prerequisites

Created (see create_challenge) or obtained a challenge and stored it in the directory challenge

Created a git repository on GitLab that will host the challenge

Enrolled your GitLab user in the marketplace

To upload a challenge you need to do the following:

Create your author keys:

$ bonseyes license create-author-keys --output-dir author_keys

Package your challenge for release with its dependencies and a redistribution license:

    $ bonseyes marketplace package-challenge \
        --pull-images \
        --challenge challenge \
        --author-keys author_keys \
        --amount "100 CHF" \
        --recipients "test@dd.com,tost@dd.com" \
        --regions https://www.wikidata.org/wiki/Q39,https://www.wikidata.org/wiki/Q40 \
        --output-dir packaged_challenge

The --recipients parameter can be used to restrict who can see the artifact on the marketplace; if omitted, all users can see it.

The --regions parameter can be used to restrict the regions in which the artifact can be seen; if omitted, users of all regions can see it.

Commit the packaged challenge in a GitLab repository that is accessible by the GitLab user you enabled in the marketplace

Upload the challenge to the marketplace:

$ bonseyes marketplace upload-challenge --url $HTTP_URL_OF_GITLAB_REPO

The challenge will be added to your profile.
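When packaging is scripted, the argument list for the package-challenge command can be assembled programmatically. The sketch below only builds the list and uses only the flags shown above; the helper name `package_challenge_command` is hypothetical, and the behaviour of the CLI itself is not verified here. The resulting list could be passed to `subprocess.run(command, check=True)` on a machine where the bonseyes CLI is installed.

```python
def package_challenge_command(challenge_dir, author_keys_dir, output_dir,
                              amount, recipients=(), regions=()):
    """Assemble the argument list for 'bonseyes marketplace package-challenge'."""
    command = [
        "bonseyes", "marketplace", "package-challenge",
        "--pull-images",
        "--challenge", challenge_dir,
        "--author-keys", author_keys_dir,
        "--amount", amount,
    ]
    # Both restrictions are optional; omitting them leaves the artifact visible to everyone.
    if recipients:
        command += ["--recipients", ",".join(recipients)]
    if regions:
        command += ["--regions", ",".join(regions)]
    command += ["--output-dir", output_dir]
    return command


command = package_challenge_command(
    "challenge", "author_keys", "packaged_challenge", "100 CHF",
    regions=["https://www.wikidata.org/wiki/Q39", "https://www.wikidata.org/wiki/Q40"],
)
print(" ".join(command))
```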